Fans, AI and copyright: why not everything is allowed

Over the past few years, AI-generated content has exploded around our favorite series, games, and fictional universes. Familiar characters, instantly recognizable styles, unseen scenes — everything now seems possible, accessible, immediate.

Contents

- The line has been crossed

- Creating is not producing

- The fan illusion: loving a work does not grant rights

- AI as a revealer, not the cause

- The lie of “Fan Creation”

- Copyright: an imperfect but necessary framework

- The disappearance of the sacred

- Taking a stand: what I refuse

- A trace but not a solution

Most discussions focus on the technology itself: its performance, its creative promises, its disruptive potential. Far less attention is paid to what AI actually changes in our relationship with existing works.

Because beneath the enthusiasm, one question is consistently avoided:

What happens to respect for a work when reproducing it, transforming it, and spreading it no longer requires time, understanding, or commitment?

This article is not about opposing pro-AI and anti-AI positions.

You won’t find a technical breakdown or a legal guide here.

I start from a simple observation: loving a work does not grant unlimited rights, and the ease offered by AI cannot serve as an excuse for erasing the creative process, copyright, or cultural transmission.

At some point, a boundary must be drawn. Even if it is uncomfortable.

Especially if it is unpopular.

The line has been crossed

In recent months, AI-generated images have flooded social media. The phenomenon is massive, fast, and often celebrated as a creative revolution. Recognizable characters, instantly identifiable styles, familiar universes endlessly replicated: everything now seems possible, accessible… and above all, almost instantaneous.

As a trained graphic designer, I initially observed this shift with a certain distance. Like many others, I assumed the excitement would fade, that the tool’s limitations would become obvious, and that people would eventually understand that this was not a miracle, but simply another tool.

Then, gradually, a discomfort set in. Slowly, almost imperceptibly.

That discomfort was not caused by the technology itself, but by what it revealed in our behaviors. Behind these images, these videos, these so-called “creations,” something had shifted. A boundary had been crossed without ever being clearly named.

The boundary between loving a work and using it.

Between creating and producing.

Between admiration and appropriation.

We hear a lot about the democratization of creation, about newfound freedom, about the power of these tools. These narratives are everywhere: enthusiastic, sometimes even sincere.

What we hear far less about is responsibility. And that is precisely where the problem begins.

We live in a time where passion has become an argument. Loving a series, a game, or a fictional universe now seems to justify its use, transformation, and distribution. On paper, the idea is appealing: it flatters emotional attachment and gives the illusion of shared creativity. In reality, it is deeply flawed.

Loving a work grants no special rights. Neither legal nor moral ones. Understanding it doesn’t either. Knowing it by heart, having watched or played it dozens of times, being deeply shaped by it — all of this creates a bond, sometimes a powerful one, but never an authorization. Confusing attachment with legitimacy erases the very foundation of respect for a work and its creator.

This confusion sits at the core of many current AI practices. The reasoning is often implicit, but always the same:

Because I love it, I understand it.

Because I understand it, I have the right.

This is the logic I question here. Not to shame or lecture. Most people are perfectly capable of understanding what creation truly involves — the effort, the time, the responsibility behind an enduring work. This is not about targeting individuals, but about examining a practice that has become normalized.

I write this because I see uses becoming widespread that slowly, but surely, erase what gives a work its value: the time required to create it, the effort it demands, the transmission it carries, and the respect owed to those who made it.

Artificial intelligence is not responsible for everything. But it accelerates. It makes possible in seconds what once required hours of work, specific skills, and real engagement. And above all, it makes appropriation nearly invisible, almost trivial.

I do not offer a miracle solution. This text draws a line.

A clear, deliberate, perhaps uncomfortable one:

Not everything that can be generated is legitimate to claim.

Not everything that is technically possible is morally acceptable.

Not everything that looks like creation actually is.

From there, the debate can begin. If we are willing to redefine what it truly means to create.

This type of tool does not invite users to create a character. It invites them to exploit an existing universe by reducing it to an instantly recognizable style. Under the guise of “creation,” AI turns protected works into features, erases the artistic process, and normalizes an appropriation that fans not only accept but increasingly push further, without even questioning it anymore.

Creating is not producing

Before talking about artificial intelligence, copyright, or contemporary abuses, we must address a fundamental confusion that pollutes nearly every current debate: the confusion between creation and production.

These two notions are now used interchangeably, despite representing profoundly different realities.

Producing is about obtaining a result.

Creating is about going through a process.

This distinction is not theoretical. It shapes our relationship with works, with authors, and now with tools.

Creating requires time. Not in a romantic sense, but in a very concrete one: time to understand what you are doing, time to face limitations, time to fail, correct, and start over.

Creating also requires personal investment, real involvement with the material, whether visual, narrative, sonic, or symbolic.

Without that time, without friction, without confrontation, all that remains is a result detached from responsibility.

This is where artificial intelligence introduces a rupture. Not because it cannot produce impressive images, texts, or videos, but because it allows those results to exist without requiring any understanding of what is being used.

One can now generate a style without knowing its history.

Use characters without caring about how they were built.

Reproduce an aesthetic without reflecting on what it expresses.

The result may be seductive, sometimes even spectacular. But the process has vanished.

It is often said that AI is “just a tool.” Technically, this is correct. Intellectually, it is insufficient.

A tool is never neutral, not because of what it is, but because of what it allows without effort. A tool that removes slowness, learning, and constraint inevitably transforms the nature of the act it accompanies.

The real question is not whether AI is a tool, but what it enables without consequence.

In this case, it enables the mass production of images and content that give the illusion of creation without assuming its fundamental requirements.

It allows one to reach a result without understanding, to claim authorship without accepting debt, to distribute without questioning origins.

This ease is the real problem, far more than the technology itself.

A prompt, in this context, is not a creative act. It is an instruction. A command. A delegation.

Typing a few lines to ask a machine to generate a universe, a character, or a scene does not constitute creation in the strict sense. It requires no mastery of artistic language, no deep knowledge of codes, no reflection on the legal or moral limits of what is being used.

The prompt produces a result.

It does not build a work.

Some will argue that intention is enough. That imagining, formulating a request, choosing an output already counts as creation. This forgets a simple truth: intention alone has never been enough to create a work. Without confrontation with the material, without responsibility for the process, without accepting constraints, intention remains a desire, not a creative act.

Creating means taking responsibility for what you do, including what you use, transform, or divert.

This distinction becomes even more critical when dealing with existing works.

Using a style, a character, or a fictional universe implies a relationship with what came before. It requires knowing where it comes from, who created it, under what conditions, and with what intentions.

It also requires accepting that not everything is freely usable. That some things do not belong to us, even if they shaped us deeply.

By removing these steps, artificial intelligence makes that relationship optional. It allows us to ignore the history of forms, the labor of authors, and legal frameworks, while still giving the impression of a completed creative act.

This is how it deeply distorts our relationship with creation.

AI does not create too much.

It allows production without understanding, without respect, and without transmission.

And a creation that transmits nothing, that belongs to no continuity, that acknowledges no lineage, becomes nothing more than an isolated, consumable, replaceable object.

It does not enrich a collective imagination.

It exhausts it.

It does not extend a work.

It uses it.

This difference, often invisible at first glance, is decisive.

From there, the debate is no longer just about AI, but about our ability to recognize what still gives value to a creative act.

As long as producing a result is confused with creating a work, as long as effort, understanding, and responsibility are treated as optional, the boundary will continue to fade. And with it, the respect owed to the works we claim to love.

The fan illusion: loving a work does not grant rights

For a long time, fan culture was a space of genuine engagement. Creating around a work required time, skills, and a form of personal exposure. Drawing fan art, editing a video, writing a fanzine, or crafting a cosplay all involved tangible effort. One had to learn, fail, and try again. There was a cost: sometimes symbolic, sometimes very real. And above all, there was a conscious relationship with the original work.

That relationship was never neutral. It was built on an implicit form of respect, because fans knew they were operating within a universe that did not belong to them. Their creations existed in a space in between: neither fully autonomous nor purely extractive. They extended, commented on, or paid tribute to the original work, without ever claiming to replace it or to assume any form of authorship.

That fragile, but undeniably clear framework has gradually cracked.

Because today, part of fan culture operates according to a very different logic.

Engagement has been replaced by immediacy.

Effort by ease.

Understanding by visual recognition.

The goal is no longer to engage in dialogue with a work, but to use it. Artificial intelligence did not create this shift, but it has made it visible and, above all, massive.

The transition is subtle, but profound. Where the fan once occupied a position of conscious admiration, they now increasingly see themselves as a legitimate user. Loving a work becomes a sufficient argument to exploit it. Passion turns into justification. And this transformation is almost never questioned.

What follows is a recurring confusion between emotional attachment and rights of use. Because a universe mattered, because a character shaped someone's teenage years or accompanied a significant period of their life, some now feel it is natural to freely appropriate it.

This reasoning is rarely stated explicitly, but it structures many practices:

This work helped build me, therefore it belongs to me. At least a little.

Yet this logic rests on nothing solid, neither legally nor culturally.

Loving a work does not create additional rights. It creates a relationship, sometimes intimate, sometimes long-lasting, but never an implicit authorization. Confusing these two dimensions erases the original creative labor and reduces it to a raw material: available, interchangeable, exploitable. This is precisely what AI enables when used without prior reflection.

This shift becomes particularly visible when productions generated from existing universes are claimed as personal creations. Images, videos, or scenes using licensed characters are shared, sometimes monetized, sometimes fiercely defended as original works. And when those same productions are copied, reused, or remixed by others, the reactions are immediate: accusations of theft, plagiarism, disrespect. I think you see the problem.

The paradox is striking. Claiming authorship over derivative content while denying the authorship of the original work amounts to drawing a boundary that constantly shifts in one’s own favor. This reasoning deliberately ignores an obvious truth: you cannot demand respect for rights you never acknowledged upstream.

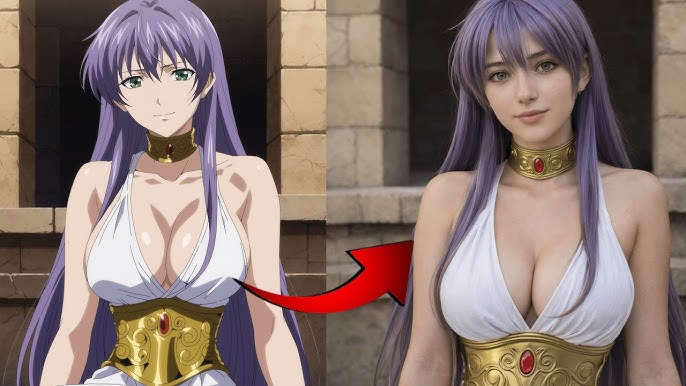

Artificial intelligence amplifies this phenomenon by removing intermediate steps. There is no longer a need to master a style in order to use it, nor to understand a universe in order to reproduce it. One simply has to recognize it, name it, and prompt it. The fan is no longer confronted with the complexity of what they admire. They consume its surface signs: its visible forms, its immediately recognizable codes.

This is where the notion of the fan fundamentally changes. We are no longer talking about an engaged amateur, but about an equipped consumer. A consumer using powerful tools to rapidly produce content that can be turned to profit, without ever questioning the legitimacy of that use.

And at that point, love for the work becomes a pretext. No longer a responsibility.

This evolution is not limited to AI. It is part of a broader movement in which everything becomes content, everything is meant to be shared, optimized, and commented on. In this context, the work ceases to be an achievement and becomes a resource. It is no longer respected for what it is, but exploited for what it can generate (usually money or, at best, visibility).

The fan illusion lies precisely in the belief that attachment is enough to erase limits.

In reality, the opposite should apply.

The more a work matters, the more care it should demand.

The more it has shaped us, the more restraint it deserves.

Rejecting this logic means accepting that what we love can be reduced to mere material. At that point, the issue is no longer just technological or legal.

It becomes cultural.

It challenges our ability to recognize what does not belong to us — even when it has profoundly shaped who we are. And as long as this distinction is not clearly reaffirmed, the boundary between homage and appropriation will continue to dissolve.

This image does not belong to DBZ Exclusives. It was generated by an artificial intelligence platform in accordance with its terms of use, then circulated without a clearly identifiable author or responsibility. It is a product of immediate recognition, designed to trigger a reflex rather than understanding. It is, in no way, a creation.

AI as a revealer, not the cause

It would be tempting to make artificial intelligence the main culprit behind all the excesses observed in recent months. That would be simple, comfortable, almost reassuring. Designating a tool as the enemy spares us a broader self-examination.

But AI did not invent these behaviors.

It revealed them, amplified them, and made them visible on an unprecedented scale.

Before AI, the same dynamics already existed. Image reuse, stylistic appropriation, and unauthorized circulation of copyrighted works were part of the digital ecosystem. What has changed is the speed, the ease, and the near-total disappearance of friction. What once required time, skills, or at least a clear intention is now accessible in seconds, without prior learning or confrontation with reality.

AI acts as an accelerator:

- it does not create the desire for appropriation, it satisfies it instantly

- it does not generate creative illiteracy, it makes it exploitable

- it does not abolish respect for works, it allows people to bypass it without immediate consequences

And it is precisely this combination that creates the problem.

Debates often focus on whether AI “steals” works or not. The reality is unfortunately much more complex.

Because the core issue is not only legal. It is cultural.

AI models have been trained on massive quantities of existing content, often without the explicit consent of creators. This fact is known, documented, and debated. Yet in everyday use, it is largely ignored or minimized.

What strikes me is not the existence of this gray area, but how easily it is accepted. Many people use these tools without ever asking where the images, styles, or universes they mobilize come from. The origin of the data becomes abstract, distant, almost unreal.

AI turns human works into an invisible raw material, disconnected from any history or effort.

By erasing the chain of transmission, AI profoundly alters our relationship with creation. It removes intermediaries, learning paths, and conscious references. Where creators once had to confront their influences, understand them, sometimes cite them or own up to them, AI allows the absorption of visual signs without ever acknowledging their source. This silent absorption lies at the heart of the current unease.

This is not to deny that some people use AI thoughtfully, as one tool among others within a broader creative process. Those practices exist and should be distinguished. The problem arises when the tool becomes a complete substitute for the process, when generation replaces creation, and the result is claimed without any reflection on what it mobilizes.

In this context, AI merely exposes an already weakened relationship with works. It highlights a deeper tendency: treating all cultural production as an immediately exploitable resource, detached from the conditions of its creation.

- The work ceases to be an achievement and becomes inventory.

- Style becomes a filter.

- The character, a motif.

This logic did not originate with AI. I’ve said it already. But these tools give it a ruthless efficiency. They fit perfectly into a culture of immediacy, visibility, and performance, where the value of content is measured by its ability to circulate quickly.

Within this framework, questions of respect, legitimacy, or transmission appear secondary… even inconvenient.

Artificial intelligence therefore acts as a brutal revealer. It lays bare our relationship to works, our tolerance for appropriation, and our growing difficulty in recognizing what does not belong to us. It does not force anyone to behave this way, but it makes such behaviors easier, faster, and more socially acceptable.

From that point on, continuing to debate technology alone misses the real issue. The real challenge lies elsewhere: in our collective ability to redefine what it means to use a work, to distinguish inspiration from exploitation, and to reintroduce responsibility where the tool tends to erase it.

The lie of “Fan Creation”

In recent months, a term has repeatedly been used to justify the massive use of artificial intelligence around existing universes: fan creation. The word is reassuring. It evokes passion, homage, disinterested engagement. It refers to an old and respected tradition in which fans extend a work out of love — sometimes clumsily, often sincerely.

How many times have I been insulted online for explaining that this was not an homage, but a form of theft?

How many times was I told, “You’re not a real fan”?

But what is now labeled “fan creation” often has very little to do with that tradition. Historically, fan creation involved risk. As I’ve already said, it required time, learning, and confrontation with technical and artistic limits.

Fans knew they were not creating instead of the work, but around it. They occupied an acknowledged asymmetrical relationship: admiration on one side, derivative creation on the other. This asymmetry was clear, understood, and rarely contested.

This is precisely where artificial intelligence deeply blurs the distinction.

When an image, video, or text is generated in seconds from a prompt referencing an existing universe, it is no longer a thoughtful extension, but an automated reproduction of recognizable signs. Styles, characters, and narrative codes are invoked without being understood or internalized. The gesture no longer consists in dialoguing with the work, but in extracting immediately exploitable visual elements.

The lie begins when this result is presented as a personal creation.

Because what is being claimed here is not merely attachment, but authorship. The generated content is displayed, sometimes monetized, sometimes defended as an autonomous work.

And when this content is then reused, copied, or remixed by others, the reactions are revealing: accusations of theft, plagiarism, disrespect. As if an invisible boundary had suddenly appeared. A boundary that was never respected upstream.

Claiming rights over a derivative production while ignoring those of the original work rests on a fundamental contradiction. It demands respect where none was granted. It invokes creation only when it becomes useful for personal recognition. This reasoning holds only because AI blurs the chain of responsibility.

Historically, fan creation never fully erased the source. It cited it, acknowledged it, sometimes even sacralized it. It never claimed to replace the work, let alone to substitute itself for it.

And automated generation does the opposite. It flattens differences. It transforms complex universes into reproducible, interchangeable motifs, consumed to exhaustion.

The problem is not that fans create.

The problem is that some claim creation where there has been no understanding, no engagement, and no responsibility.

The issue is not homage, but appropriation.

Not inspiration, but exploitation.

This drift is reinforced by platform-driven visibility logics. Quickly generated, immediately recognizable content circulates more easily than slow, personal, sometimes imperfect work.

Whether we like it or not, AI fits perfectly into this attention economy, where what matters is no longer what is made, but what is shown.

In this context, fan creation becomes a convenient label. It neutralizes criticism by invoking passion. It acts as a moral shield for practices that are closer to consumption than creation.

Most importantly, it avoids the only question that truly matters: what does this gesture actually bring to the original work?

A derivative creation that transmits nothing, extends no reflection, and respects no boundary does not enrich a universe. It exhausts it. It turns meaning into empty forms, repeated until saturation.

This is not an homage.

It is extraction.

By calling everything creation, the word itself is emptied of meaning. And by turning fan creation into a catch-all, we undermine what once made it precious: the ability to engage with a work without dispossessing it.

At this point, the debate inevitably becomes political and cultural. Because if automated generation is accepted as a legitimate form of creation, then nothing distinguishes the author from the user, the creator from the consumer, the work from its reproduction.

And this indistinction is not neutral. It fundamentally reshapes what we accept doing to the works we claim to love.

Copyright: an imperfect but necessary framework

The moment copyright enters the conversation, faces tighten. Eyes narrow. People clench their jaws. Positions harden fast. Copyright has become the main flashpoint for today’s tensions around artificial intelligence.

It concentrates anger, accusations of censorship, and the now-classic speeches about “creative freedom” being threatened. But this hostility says less about copyright itself than it does about our contemporary relationship with works.

What truly bothers people is not the law.

It’s the very idea of a boundary.

Because more and more, people hate boundaries.

The idea that a work is not instantly available — that it resists use, that it imposes a framework, a delay, a permission — has become almost intolerable. In an environment where everything is accessible, copyable, generatable in seconds, copyright feels like an anomaly. It reminds us that some things are not “up for grabs,” that not everything can be used freely, even with the best intentions in the world. And it’s precisely that resistance that triggers the backlash.

Hostility toward copyright is often framed as a defense of creativity. In reality, it mostly reveals a growing inability to accept something essential — and largely forgotten: the idea of cultural debt.

Using a work without asking who it belongs to, how it was produced, and what framework it exists within is treating creation as a neutral resource — detached from human responsibility.

The rejection becomes even more paradoxical when many of those criticizing copyright simultaneously demand recognition for their own output. They want credit. Protection. Respect. They denounce plagiarism, appropriation, unauthorized reuse.

So the problem isn’t the principle of limits. It’s limits when they apply to me. A logic we’ve seen for decades in politics and that has now spread into creation.

Copyright has never been perfect. It has never guaranteed fairness or prevented injustice. But it still states something essential: a work is not raw material available by default. It is the result of situated labor, produced over time — and that labor creates rights as well as duties.

With AI, this function becomes brutally visible. Tools can absorb, recompose, and distribute works at such speed that the idea of a framework starts to feel like a pointless obstacle.

But that framework does not exist to prevent creation. It exists to remind us that creation cannot exist without responsibility.

At this point, the debate is no longer truly about copyright. It is about whether we can accept that not everything is transformable, reusable, or claimable. Refuse that idea, and you accept that the work ceases to be an achievement and becomes just another resource.

And when a work no longer resists anything, when it imposes no limits, it gradually stops being a work. It becomes something far less interesting: content.

Legally, images inspired by protected universes such as Naruto do not become “free” because they were generated by an AI. Generating and distributing derivative visuals without the explicit consent of the rights holders remains an unauthorized exploitation of the work.

The disappearance of the sacred

There is a direct consequence to everything above. One that goes far beyond AI or copyright, because it touches something more diffuse, and more fundamental: the gradual disappearance of the sacred in our relationship to works.

By “sacred,” I do not mean religious or mystical. I mean symbolic. A space where certain things resist immediate use, where not everything is available, manipulable, or transformable without care. In this sense, the sacred is what imposes restraint. It is what makes you pause, even briefly, before acting.

For a long time, works occupied that space. Not all works, and not in the same way. But enough for a tacit respect to remain. A work could be discussed, criticized, interpreted, even sometimes diverted — yet it retained weight. It demanded time. It resisted pure consumption.

That relationship is dissolving.

When everything becomes content, the work stops being an outcome and becomes material.

It is no longer something that confronts us. It becomes something we exploit.

It is no longer carried by a story, a context, a situated human gesture.

It becomes a set of recognizable signs: ready to be recycled, optimized, redistributed.

AI accelerates this movement without being its origin. It fits into a logic already deeply installed: immediacy, constant circulation, visibility as the highest value. In that environment, what doesn’t circulate quickly disappears. What resists becomes suspicious.

What imposes limits is treated as a problem.

The sacred cannot function in that logic. By definition, it requires slowness, attention, sometimes even silence. It implies that not everything is immediately accessible, that thresholds, frameworks, and implicit rules exist. And those are precisely the things content culture erases.

When a work is reduced to a style, a character, an aesthetic that can be exploited instantly, it loses that dimension. It is no longer encountered. It is consumed blindly. It no longer transforms the person receiving it, because it is used to produce something else: an image, a video, visibility, sometimes monetization.

The most troubling part is that this shift has a cost.

It impoverishes our relationship to the universes we love. It replaces experience with reproduction, transmission with repetition, understanding with recognition. And it blurs the boundary between what deserves to be preserved and what can be used without restraint.

The disappearance of the sacred is not spectacular. It doesn’t arrive as a clear break. It happens through a slow, steady slide. The same way political norms erode, when what was unacceptable a few years ago becomes normal today.

Through the accumulation of “harmless” gestures. Through the gradual acceptance of the idea that everything is available, everything can be taken, everything can be transformed with no consequences.

In that context, defending a boundary is not conservatism. It is lucidity.

Recognizing that a work does not belong to us, even when it shaped us, preserves what gives it value. Not by freezing it, but by accepting that it cannot be fully dissolved into our uses.

What the disappearance of the sacred reveals is not a lack of creativity. It is a loss of connection to the long term.

A growing inability to accept that some things demand more than a prompt, more than a click, more than an intention. A work only fully exists if it can still resist.

And when it no longer resists, when it no longer stands in the way of immediate use, it stops being a work. It becomes one more element in a continuous, undifferentiated flow where everything is equivalent and nothing holds.

This is where the real stakes of AI in creation are being decided today. Not in tool performance, but in our ability to preserve zones of restraint, respect, and transmission.

Content circulates. The sacred disappears.

And with it, an essential part of what used to make meaning.

Taking a stand: what I refuse

At this stage, it would be tempting to propose solutions: rules, guidelines, an ideal framework. But that is not the point of this text.

I am not a legislator. Not an arbiter. Not the guardian of universal morality.

I’m not writing to tell others what they should do.

I’m writing to state what I refuse.

I refuse the idea that everything that can be generated is legitimate to claim. Technical possibility does not create rights and it does not create value. Producing an image, video, or text from an existing universe does not make it a full creation, especially when the process erases any understanding of what is being used.

I also refuse to treat love for a work as a free pass. Being shaped by a universe, recognizing yourself in it, integrating it into your life story does not authorize you to seize it without restraint or thought. On the contrary: the more a work matters, the more precautions it should demand.

Rejecting that principle turns attachment into justification, and passion into an argument.

Finally, I refuse to participate in a logic where creation is reduced to optimizing recognizable signs — where style becomes a filter, a character becomes a reusable silhouette, a universe becomes an interchangeable backdrop. This logic does not enrich works. It weakens them by slowly emptying them of what made them singular.

These refusals are not a universal path or a rule for everyone. They are a personal line. A way to keep looking at certain works without feeling like I’m betraying them.

This is not about rejecting AI as a whole, nor denying it can be integrated into thoughtful creative processes. It is about refusing AI as a substitute for the process, as a permanent shortcut, as a way to escape the responsibility every creative gesture implies.

Owning that position comes with consequences: disagreeing with widely adopted practices, being seen as rigid or outdated, not joining certain trends even when they’re rewarded, visible, encouraged.

But to me, that relative marginality is a small price to pay if it preserves coherence.

Because deep down, the question is not what is allowed or forbidden. It is whether we can keep looking at a work, talking about it, transmitting it without having contributed to its dilution.

Taking a stand here does not mean standing above others. It means refusing to hide behind the tool, the trend, or the convenience. Many people do it without thinking. I don’t.

We have to accept that certain limits exist. Even when they’re unpopular, even when they slow us down, even when they force us to renounce something.

This refusal is not an end.

It is a condition.

The minimal condition for still calling certain things works and not just content.

A trace but not a solution

This text does not offer a definitive answer. It does not aim to close the debate, and certainly not to pacify it. It leaves certain questions open on purpose, because those are the ones each person must face alone.

There is no comfortable position here. Only choices.

AI will keep evolving. Tools will become more powerful, more accessible, more embedded in daily practice. Uses will multiply. Boundaries will keep shifting. That much is inevitable.

But what remains possible is refusing to accept everything as self-evident.

It is possible to refuse certain conveniences.

To slow down when everything demands acceleration.

To acknowledge that a work does not belong to us. Even when it shaped us.

There is nothing heroic about that refusal. It’s discreet, sometimes invisible. It doesn’t protect the world, or works, or creation itself. It simply protects personal coherence: the ability to keep looking at certain universes without the feeling that you helped drain them of meaning.

The real question is not whether AI will replace creators, or whether copyright will survive in its current form. The question is simpler (and perhaps more disturbing): what are we willing to do to the works we claim to love, now that nothing forces restraint anymore?

In a world where everything can be generated, reproduced, shared, and claimed in seconds, the true rarity is no longer creativity. It is responsibility. The ability to say no. To draw a line. To accept that a work can sometimes be worth more than what it allows you to produce.

This text asks no one to follow it. It simply indicates a line.

Here is where I stand.

Here is what I refuse.

Here is what I still respect.

The rest belongs to each of us.

I'll let you think about it.